It’s time for the quarterly report, but with progress happening only under the hood, a new video did not make much sense. So here’s a quick blog summary instead; a detailed devlog demonstrating the “opods” (optical pods) in action will follow as soon as the system is operational! 🧑‍🔧

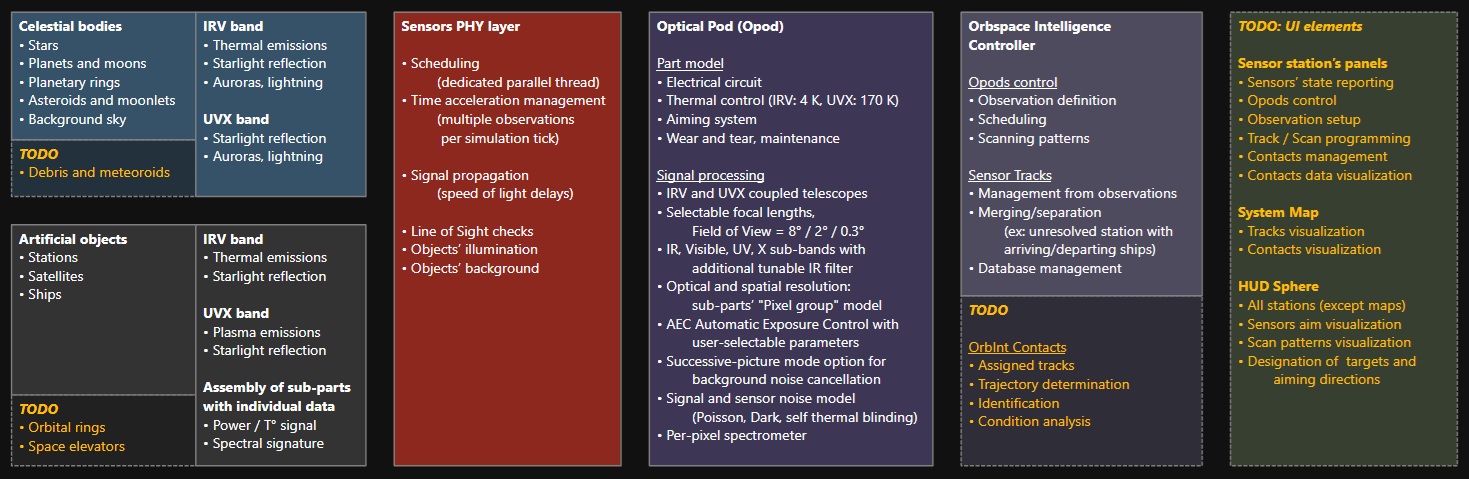

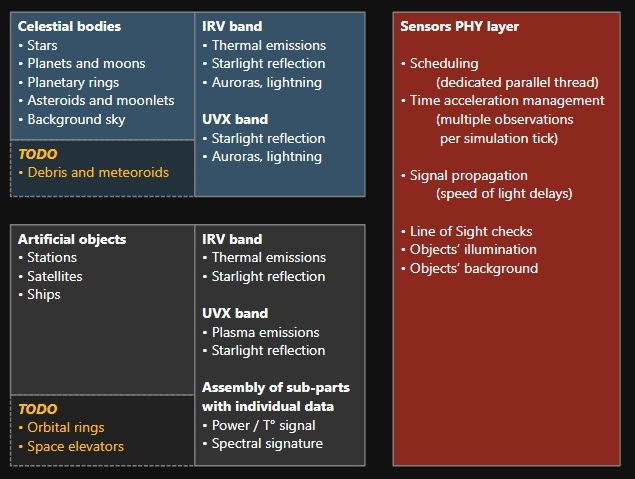

Signal Sources

The signal source model now accounts for the sky’s average background brightness, which is higher towards the galactic disc and the central bulge.
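As an illustration only, a toy background model can add a disc term that fades with galactic latitude and a bulge term that fades with angular distance from the galactic center. The functional forms, the `sky_background` name and every constant below are assumptions chosen for readability, not the game's actual parameters.

```python
import math

# Hypothetical constants (not from the game), in relative surface-brightness units.
BASE = 1.0              # isotropic floor, far from the galactic plane
DISC_PEAK = 4.0         # extra brightness on the galactic plane
DISC_SCALE_DEG = 10.0   # angular scale height of the disc glow
BULGE_PEAK = 10.0       # extra brightness at the galactic center
BULGE_SCALE_DEG = 15.0  # angular radius of the bulge glow


def angular_distance_deg(l1, b1, l2, b2):
    """Great-circle distance between two (longitude, latitude) directions, in degrees (haversine)."""
    l1, b1, l2, b2 = map(math.radians, (l1, b1, l2, b2))
    h = (math.sin((b2 - b1) / 2) ** 2
         + math.cos(b1) * math.cos(b2) * math.sin((l2 - l1) / 2) ** 2)
    return math.degrees(2 * math.asin(math.sqrt(min(1.0, h))))


def sky_background(l_deg, b_deg):
    """Relative background brightness towards galactic coordinates (l, b)."""
    # Disc glow: strongest on the plane (b = 0), decaying with |latitude|.
    disc = DISC_PEAK * math.exp(-abs(b_deg) / DISC_SCALE_DEG)
    # Bulge glow: Gaussian falloff with angular distance from the center (0, 0).
    r = angular_distance_deg(l_deg, b_deg, 0.0, 0.0)
    bulge = BULGE_PEAK * math.exp(-(r / BULGE_SCALE_DEG) ** 2)
    return BASE + disc + bulge
```

With these made-up constants, looking at the galactic center gives 15 units, looking along the plane away from the center gives about 5, and looking towards a galactic pole gives about 1.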

NPC ships have been fitted with their “SensorTarget” view. This model is dynamic, reflecting each ship’s flight, and can answer queries for any past time, which is necessary because of signal propagation delays at long range.
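Past-time queries can be sketched as a time-indexed position history plus a light-time correction: an observer at time `t_obs` sees the target where it was at the emission time `t_obs - d/c`, which depends on the distance `d` itself and is therefore solved iteratively. `SensorTargetHistory` and `apparent_position` are hypothetical names, and linear interpolation between recorded states is an assumption; the actual SensorTarget model may store and interpolate its state differently.

```python
import bisect
from dataclasses import dataclass, field

C = 299_792_458.0  # speed of light, m/s


@dataclass
class SensorTargetHistory:
    """Hypothetical time-indexed record of a ship's position (not the game's actual class)."""
    times: list = field(default_factory=list)      # simulation times, s, strictly increasing
    positions: list = field(default_factory=list)  # (x, y, z) tuples, m

    def record(self, t, pos):
        if self.times and t <= self.times[-1]:
            raise ValueError("samples must be appended in increasing time order")
        self.times.append(t)
        self.positions.append(pos)

    def position_at(self, t):
        """Linearly interpolate the position at time t, clamped to the recorded range."""
        if not self.times:
            raise LookupError("no samples recorded")
        i = bisect.bisect_right(self.times, t)
        if i == 0:
            return self.positions[0]
        if i == len(self.times):
            return self.positions[-1]
        t0, t1 = self.times[i - 1], self.times[i]
        p0, p1 = self.positions[i - 1], self.positions[i]
        a = (t - t0) / (t1 - t0)
        return tuple(c0 + a * (c1 - c0) for c0, c1 in zip(p0, p1))


def apparent_position(history, observer_pos, t_obs, iterations=5):
    """Position the target appears to occupy at t_obs: where it was at the
    emission time t_obs - d/c, found by fixed-point iteration on the light time."""
    t_emit = t_obs
    for _ in range(iterations):
        p = history.position_at(t_emit)
        d = sum((a - b) ** 2 for a, b in zip(p, observer_pos)) ** 0.5
        t_emit = t_obs - d / C
    return history.position_at(t_emit), t_emit
```

Because ships move far slower than light, the iteration converges in a couple of steps. At roughly 1 AU the light time is about 500 s, so a ship observed "now" is seen where it was more than eight minutes earlier.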

Another addition is a spectral signature for every sub-part of artificial objects. This data abstracts the spectral characteristics of each part’s material (its color in the visible spectrum, for example) so that a spectroscopic analysis of the received light can be performed. It will feed the target identification and attitude determination processes.
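One way to picture such an abstracted signature is a reflectance value per coarse spectral band: the received spectrum is the area-weighted blend of the visible sub-parts, and a crude identification picks the library material whose signature has the most similar shape (cosine similarity). The band layout, the material names and values, and the matching rule are all assumptions for illustration, not the game's data.

```python
import math

# Hypothetical abstraction: reflectance in five coarse bands
# (e.g. UV, blue, green, red, near-IR). Values are made up.
MATERIALS = {
    "solar_panel": [0.05, 0.20, 0.10, 0.08, 0.30],
    "white_paint": [0.60, 0.85, 0.85, 0.85, 0.80],
    "gold_foil":   [0.30, 0.35, 0.60, 0.90, 0.95],
}


def received_spectrum(visible_parts):
    """Blend the signatures of the visible sub-parts, weighted by visible area (m^2).

    visible_parts: iterable of (material_name, visible_area) pairs.
    """
    n_bands = len(next(iter(MATERIALS.values())))
    total = [0.0] * n_bands
    for material, area in visible_parts:
        for k, r in enumerate(MATERIALS[material]):
            total[k] += area * r
    return total


def cosine_similarity(a, b):
    """Similarity of spectral shape, independent of overall brightness."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def dominant_material(spectrum):
    """Crude identification: the library material whose signature best matches the spectrum."""
    return max(MATERIALS, key=lambda m: cosine_similarity(spectrum, MATERIALS[m]))
```

Since only the sub-parts facing the sensor contribute, the blend weights change as the object rotates, which is why the same data can also help with attitude determination.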

Sensor PHY Layer

The PHY layer, which manages the interactions between the observing sensors and the observable objects, is now complete. It can handle distinct points of view (for instance, the ship and a companion surveillance drone) and multiple observations per simulation tick. The latter was necessary to avoid severely limiting the observation frequency at high time acceleration: at ×2000, a single tick at 60 fps spans 32 s of simulated time.
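Scheduling observations on an absolute time grid, rather than once per tick, is one way to obtain several observations within a long tick. The sketch below assumes a fixed sensor cadence `obs_interval` and a 16 ms frame (about 60 fps), which reproduces the 32 s tick at ×2000; `sim_tick_length` and `observation_times` are hypothetical names.

```python
import math

FRAME_DT = 0.016  # real seconds per frame (~60 fps); assumed value


def sim_tick_length(time_acceleration, frame_dt=FRAME_DT):
    """Simulated seconds covered by one tick."""
    return time_acceleration * frame_dt


def observation_times(tick_start, tick_length, obs_interval):
    """All grid times k * obs_interval inside (tick_start, tick_start + tick_length].

    Because the grid is absolute, the sensor cadence does not depend on the
    time acceleration: a short tick may hold no observation, a long one many.
    """
    first = math.floor(tick_start / obs_interval) + 1
    last = math.floor((tick_start + tick_length) / obs_interval)
    return [k * obs_interval for k in range(first, last + 1)]
```

With a 2 s cadence, one 32 s tick at ×2000 yields 16 observations instead of one, while at ×1 most 16 ms ticks yield none and the effective cadence stays at 2 s. Each of those observation times then needs the target state at that instant, which is what the past-time queries provide.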

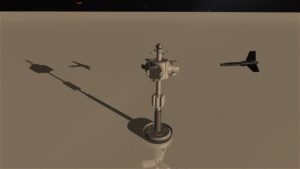

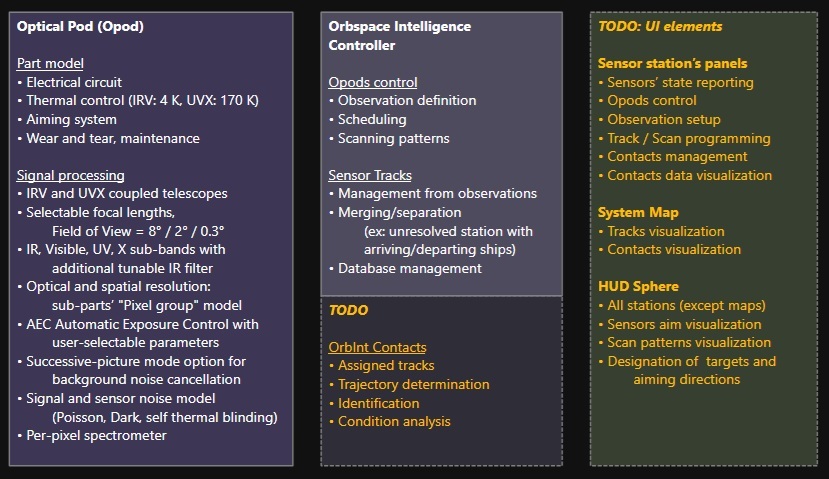

Optical Pods

The ship’s optical pods are now ready for proper field validation. Besides their part model, which is similar in nature to the rest of the ship’s equipment, they provide the signal processing functions that convert the raw inputs from the PHY layer into “optical track samples” that the Orbspace Intelligence Controller can process.
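For illustration, a common way to turn a raw frame into a sample is to threshold the background-subtracted pixels and take their signal-weighted centroid, converted to angular offsets from the boresight. This generic technique is swapped in for the sake of the example; `OpticalTrackSample`, `extract_sample` and the per-pixel field of view `ifov` are hypothetical and do not describe the pods' actual processing chain.

```python
from dataclasses import dataclass


@dataclass
class OpticalTrackSample:
    """Hypothetical output record: when, where in the field of view, and how bright."""
    t: float       # simulation time of the exposure, s
    az_off: float  # horizontal offset from boresight, rad
    el_off: float  # vertical offset from boresight, rad
    flux: float    # background-subtracted signal, arbitrary units


def extract_sample(t, frame, background, threshold, ifov):
    """Turn a raw frame (2D list of pixel counts) into one track sample, or None.

    Pixels exceeding background + threshold are kept; the sample position is
    their signal-weighted centroid, scaled by the per-pixel field of view ifov (rad).
    """
    rows, cols = len(frame), len(frame[0])
    total = sx = sy = 0.0
    for y in range(rows):
        for x in range(cols):
            s = frame[y][x] - background
            if s > threshold:
                total += s
                sx += s * x
                sy += s * y
    if total == 0.0:
        return None  # nothing above threshold: no detection in this frame
    cx, cy = sx / total, sy / total
    # Offsets are measured from the frame center; image rows grow downward,
    # so the elevation offset is negated.
    az_off = (cx - (cols - 1) / 2) * ifov
    el_off = -(cy - (rows - 1) / 2) * ifov
    return OpticalTrackSample(t, az_off, el_off, total)
```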

Without going into too much detail before the dedicated devlog, the implementation revolves around a “pixel group” model that tries to emulate the operation of imaging sensors as “photon integrators”. The goals of this arguably very low-level implementation are twofold:

- Properly modeling observations in all circumstances (dark or bright targets against dark or bright backgrounds, sub-pixel or resolved targets, etc.)

- Requiring a set of user-selected parameters that reflect the optical nature of the sensor (FoV selection, filters, exposure control, etc.) and that must be adjusted to the tactical situation.
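A “photon integrator” can be sketched with expected values: the signal grows linearly with exposure time, while the noise combines photon shot noise from target and background with per-pixel read noise, and a pixel saturates once its well is full. `PixelGroup` and its default quantum efficiency, read noise and full-well capacity are assumptions for the example, and the light is assumed to be spread evenly over the group's pixels.

```python
import math
from dataclasses import dataclass


@dataclass
class PixelGroup:
    """Hypothetical 'photon integrator' covering the pixels a target falls on.

    Rates are expected photon arrivals per second; noise is treated statistically
    (shot noise + read noise) rather than simulated photon by photon.
    """
    n_pixels: int                   # pixels the target's light covers (1 if sub-pixel)
    quantum_efficiency: float = 0.8
    read_noise_e: float = 5.0       # electrons RMS per pixel per readout
    full_well_e: float = 50_000.0   # electrons a pixel holds before saturating

    def integrate(self, target_rate, background_rate_per_px, exposure_s):
        """Return (signal_e, snr, saturated) for one exposure."""
        signal = self.quantum_efficiency * target_rate * exposure_s
        background = (self.quantum_efficiency * background_rate_per_px
                      * exposure_s * self.n_pixels)
        # Assumes target and background light are spread evenly over the group.
        per_pixel = (signal + background) / self.n_pixels
        saturated = per_pixel >= self.full_well_e
        noise = math.sqrt(signal + background + self.n_pixels * self.read_noise_e ** 2)
        snr = signal / noise if noise > 0 else 0.0
        return signal, snr, saturated
```

With these made-up numbers, a faint sub-pixel target (1000 photons/s) against a dark background reaches an SNR of about 28 in a 1 s exposure. Against a bright background (100,000 photons/s per pixel) the same exposure saturates, and shortening it to 0.1 s avoids saturation but drops the SNR below 1: the kind of trade-off the exposure and filter settings are meant to put in the player's hands.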

Orbspace Intelligence Controller

The OIC is the ship’s high-level system in charge of the sensors. It will receive a lot more work in future updates, but it can already pilot the opods and process their returns into “Optical Tracks”. These are the source data attached to “Contacts”, which will be analyzed to extract information about the target (trajectory determination, identification, condition analysis).
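A minimal sketch of how samples might be grouped into tracks and contacts is nearest-neighbor gating: a new sample joins the contact whose latest direction lies within an angular gate, otherwise it opens a new contact. The gating rule, the class layout and the `gate_rad` parameter are assumptions, not the OIC's documented behavior; a practical tracker would at least predict each target's motion before gating.

```python
import math
from dataclasses import dataclass, field


@dataclass
class OpticalTrack:
    """Hypothetical: time-ordered (t, az, el) samples believed to come from one object."""
    samples: list = field(default_factory=list)

    def last_direction(self):
        _, az, el = self.samples[-1]
        return az, el


@dataclass
class Contact:
    """Hypothetical: a tracked object; later analyses would read its tracks."""
    contact_id: int
    tracks: list = field(default_factory=list)


def angular_separation(az1, el1, az2, el2):
    """Great-circle angle between two directions, radians (haversine, stable for small angles)."""
    h = (math.sin((el2 - el1) / 2) ** 2
         + math.cos(el1) * math.cos(el2) * math.sin((az2 - az1) / 2) ** 2)
    return 2 * math.asin(math.sqrt(min(1.0, h)))


def associate(contacts, sample, gate_rad):
    """Append a (t, az, el) sample to the closest contact's latest track within
    gate_rad, or open a new contact if none is close enough. Returns that contact."""
    _, az, el = sample
    best, best_sep = None, gate_rad
    for contact in contacts:
        sep = angular_separation(az, el, *contact.tracks[-1].last_direction())
        if sep <= best_sep:
            best, best_sep = contact, sep
    if best is None:
        best = Contact(contact_id=len(contacts) + 1, tracks=[OpticalTrack()])
        contacts.append(best)
    best.tracks[-1].samples.append(sample)
    return best
```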

TODO (2026-02)

Development will now focus on the UI elements needed to control the pods and visualize their optical track output, so that they can be properly debugged and validated. From there, higher-level analysis functions will be added progressively.

Thanks for reading! 🧑‍🔧